Restyling with InstantStyle

As powerful models proliferate throughout tech's major players, it becomes more and more critical to keep smaller developers in the game. Each low-cost tool that arms the GPU-poor helps ensure that a few centralized powers don't run away with the AI market. Recently we've seen huge strides in this area, including various flavours of LoRA for fine-tuning, quantization for efficient inference, and zero- and few-shot learning techniques. The final category is especially appealing, given its low cost and exceptional accessibility.

"Making the complicated simple, awesomely simple, that's creativity" - Tom Ford

Falling into that aspirational last bucket is "InstantStyle", a zero-shot technique for styling Stable Diffusion image generations. In short, an embedding of a reference image is injected into particular layers of the model as it produces a new image.

The technique is effective for both text->image and ControlNet-based image generations. With ControlNet, you can apply a reference image's style to an existing image's structure. Edges will remain unchanged, but the new style will be applied. We'll take a look at both modes of operation, all using Stable Diffusion XL.

I'm personally excited about this development, since I've been hunting for excuses to play with Stable Diffusion (like my AI-powered art frame). Naturally, a GPU-lite approach like this one is a great tool to take for a test drive.

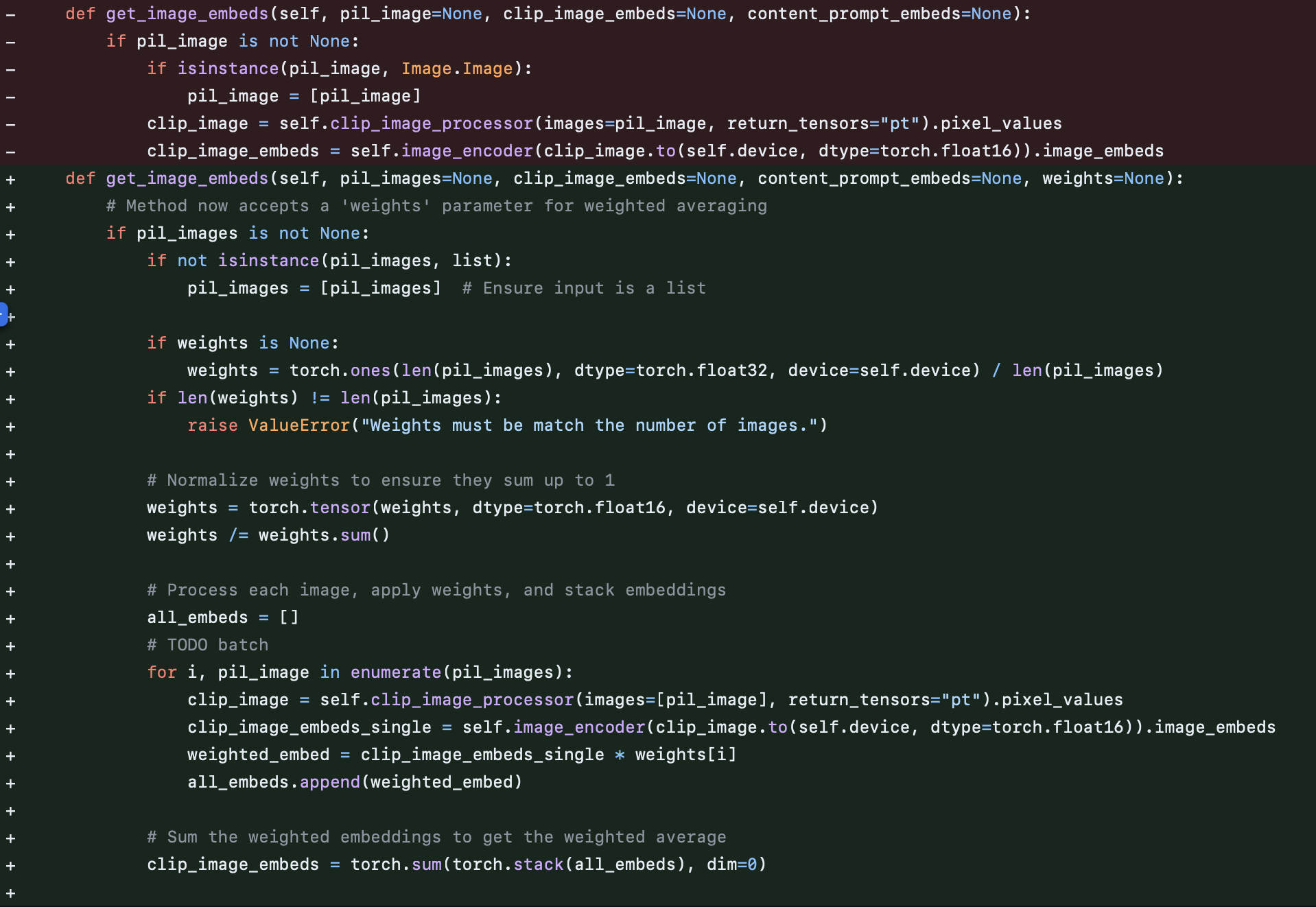

But first, I chose to extend the provided method slightly. Working with a single reference image is already impressive, but supporting multiple references is more flexible, and I think it aligns more closely with certain workflows (e.g. tattoo design, as we'll see soon). The extension to support a list of reference images with varying weights was quite straightforward. Note that some normalization is added so that we're still operating at the same scale as if we had a single reference image.
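To make the idea concrete, here is a minimal sketch of a weighted, normalized blend. It is not the actual extension: the function name `blend_embeddings`, the plain-list "embeddings", and the choice to divide by the sum of absolute weights are all assumptions made for illustration.

```python
from typing import List, Sequence


def blend_embeddings(
    embeddings: Sequence[Sequence[float]],
    weights: Sequence[float],
) -> List[float]:
    """Blend equal-length embedding vectors using per-reference weights.

    Assumption: weights are rescaled by the sum of their absolute values,
    so the blend stays at roughly the scale of a single reference.
    """
    if not embeddings or len(embeddings) != len(weights):
        raise ValueError("need one weight per embedding")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same length")
    total = sum(abs(w) for w in weights)
    if total == 0:
        raise ValueError("at least one weight must be non-zero")

    blended = [0.0] * dim
    for emb, w in zip(embeddings, weights):
        for i, value in enumerate(emb):
            blended[i] += (w / total) * value
    return blended


# Toy 2-dimensional stand-ins for real image embeddings (hypothetical values).
wolf, flowers, spirals = [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]

balanced = blend_embeddings([wolf, flowers, spirals], [1.0, 1.0, 1.0])
less_flowers = blend_embeddings([wolf, flowers, spirals], [1.0, 0.25, 1.0])
single = blend_embeddings([wolf], [2.0])  # one reference: weight cancels out
```

With a single reference, the normalization returns the embedding unchanged regardless of its weight, which is the "same scale" property described above.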

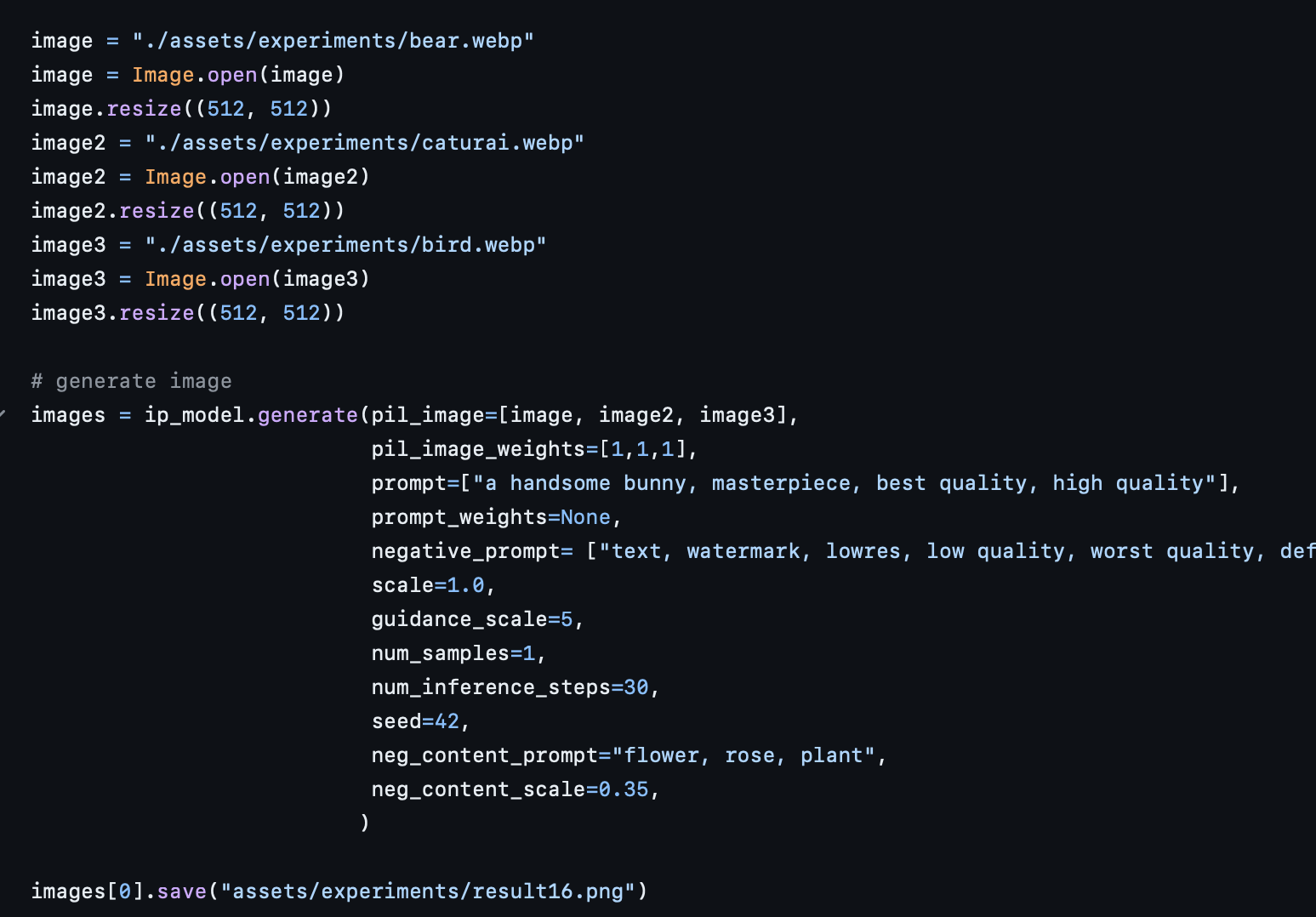

With this in hand, we can start experimenting. I took it for a spin on lightning.ai, as it's quite convenient for grabbing a right-sized GPU for this sort of exploration. For this application, any GPU will be performant for text->image, so I just grabbed the cheapest option with a low wait time (usually a T4). The ControlNet workflow needed slightly more resources, so I opted for an L4 for that work.

Working with multiple references

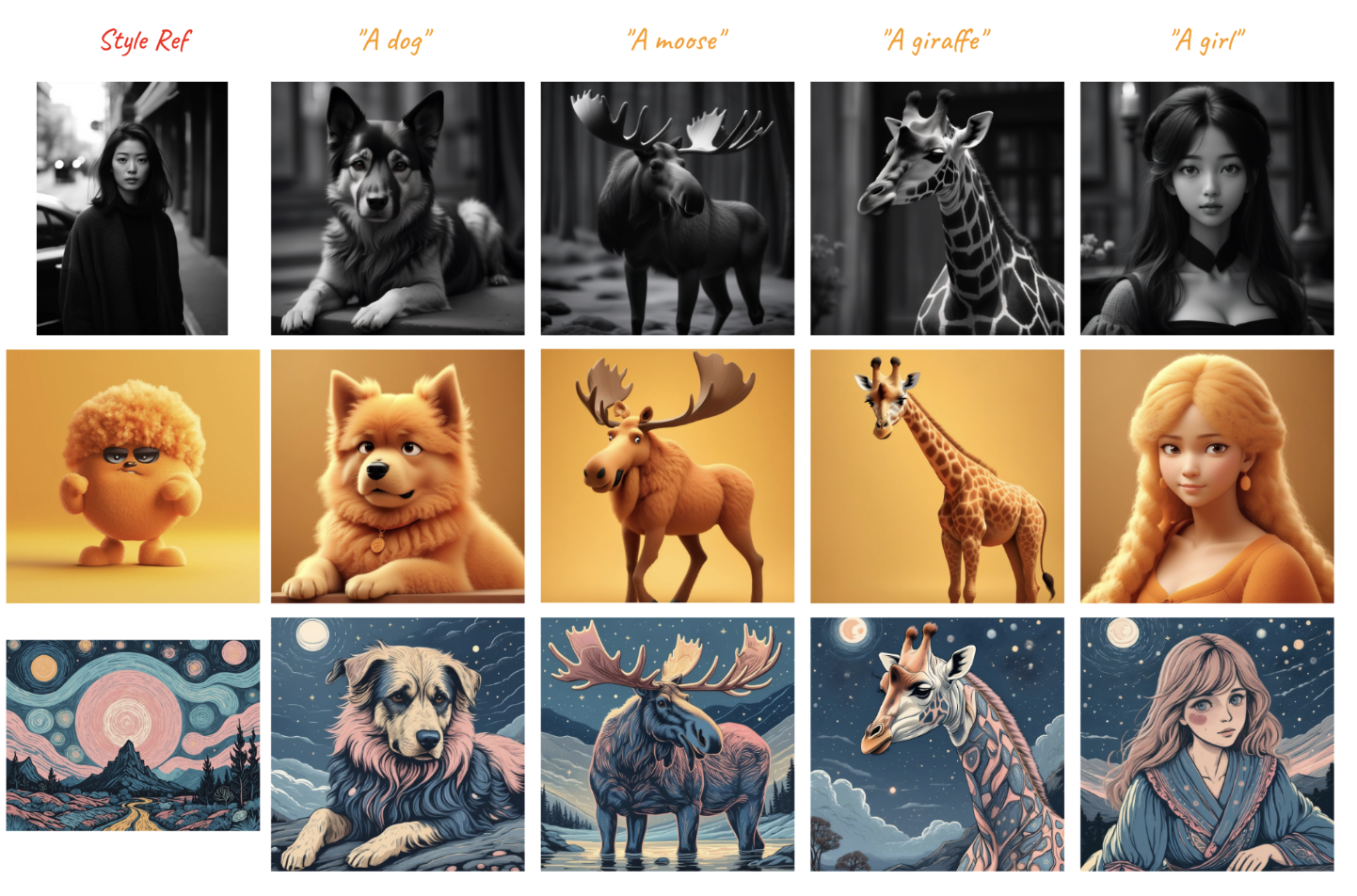

I started by experimenting with the style images provided by the InstantStyle authors. I used the cartoonified wolf, the realistic photo of a woman surrounded by flowers, and a sort of spirally landscape. I produced a hawk, initially using a balanced blend of all 3 styles:

We see the flower image's content seemingly bleeding into the result here. The authors suggest correcting for that using a negative prompt, but in our case, we can also reweight the reference images to reduce the flowers' influence. We also take a look at what happens if we weight more heavily towards the cartoon animal or the spirals:

Tattoo styling

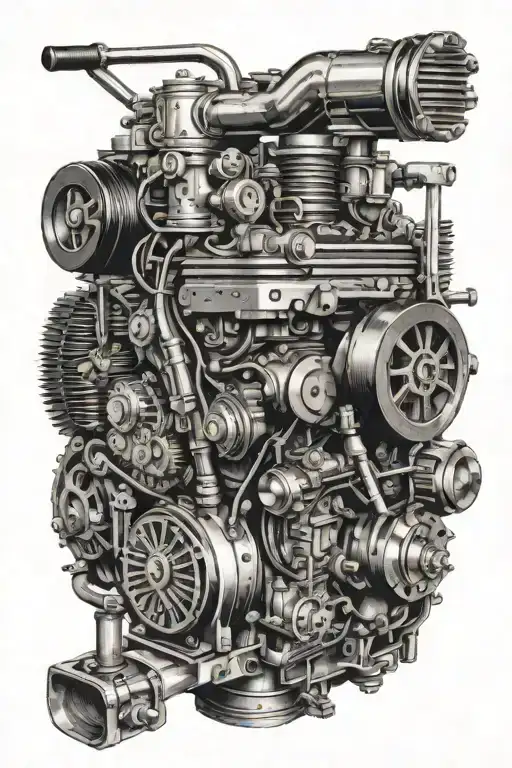

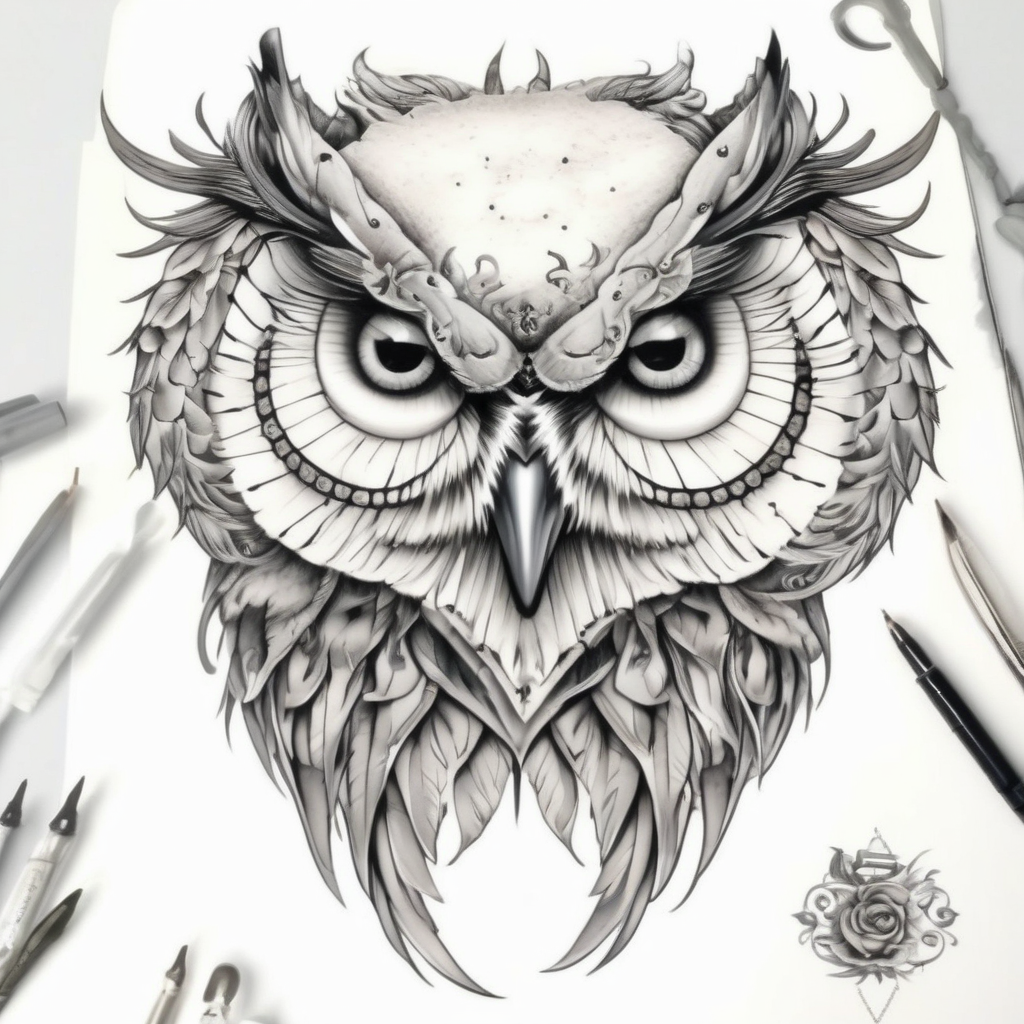

Now, in this example we're mixing wildly different styles in the reference images. I think this is often not going to be the desired workflow. Instead, let's take a look at a more practical use case - tattoo generation. I tried to create an owl tattoo design using 3 different reference image sets of different styles (with a style per column):

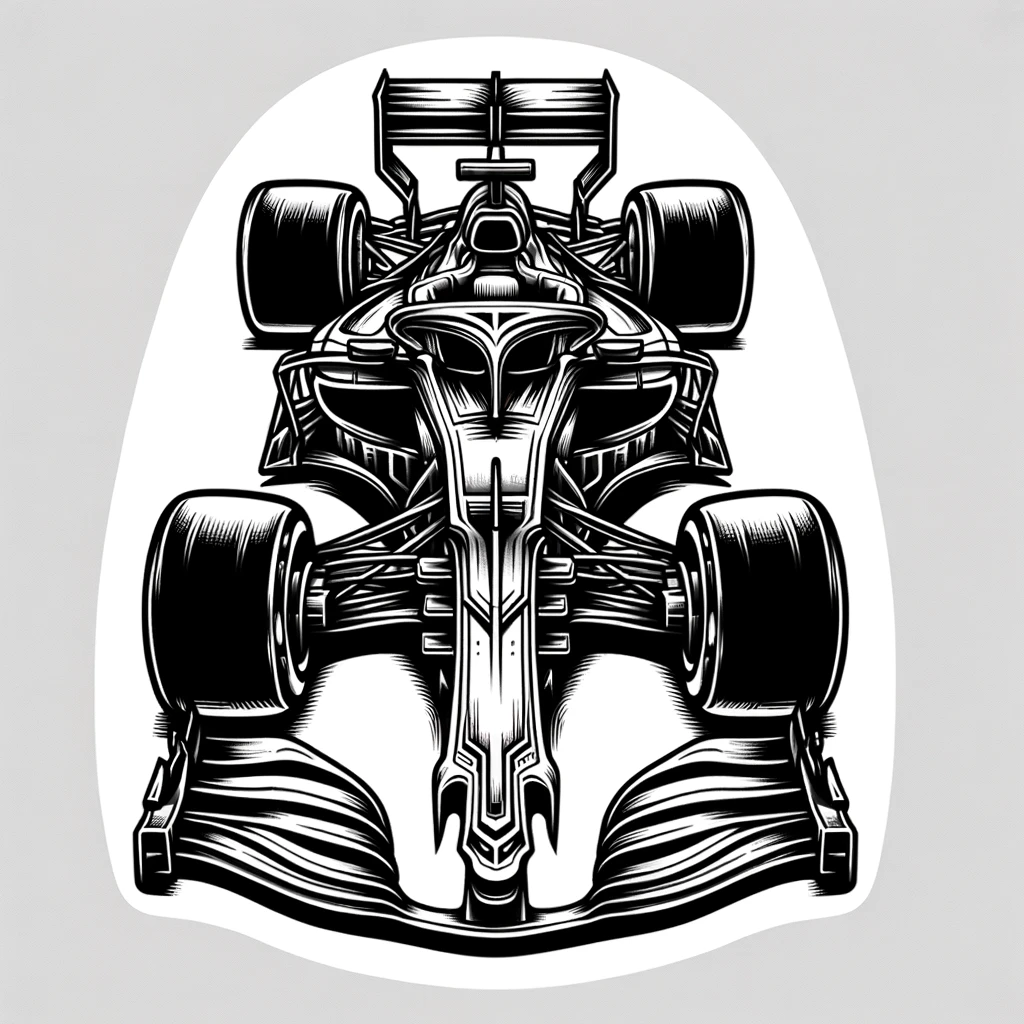

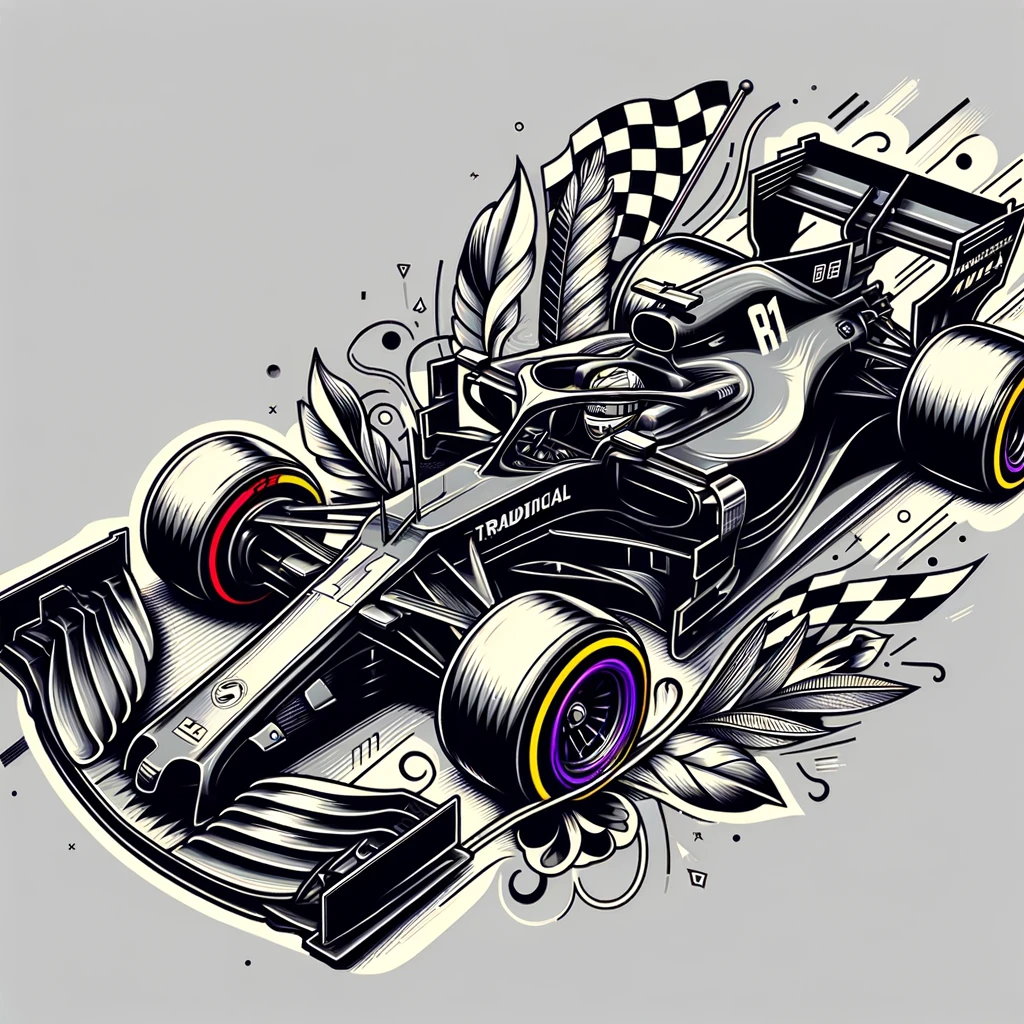

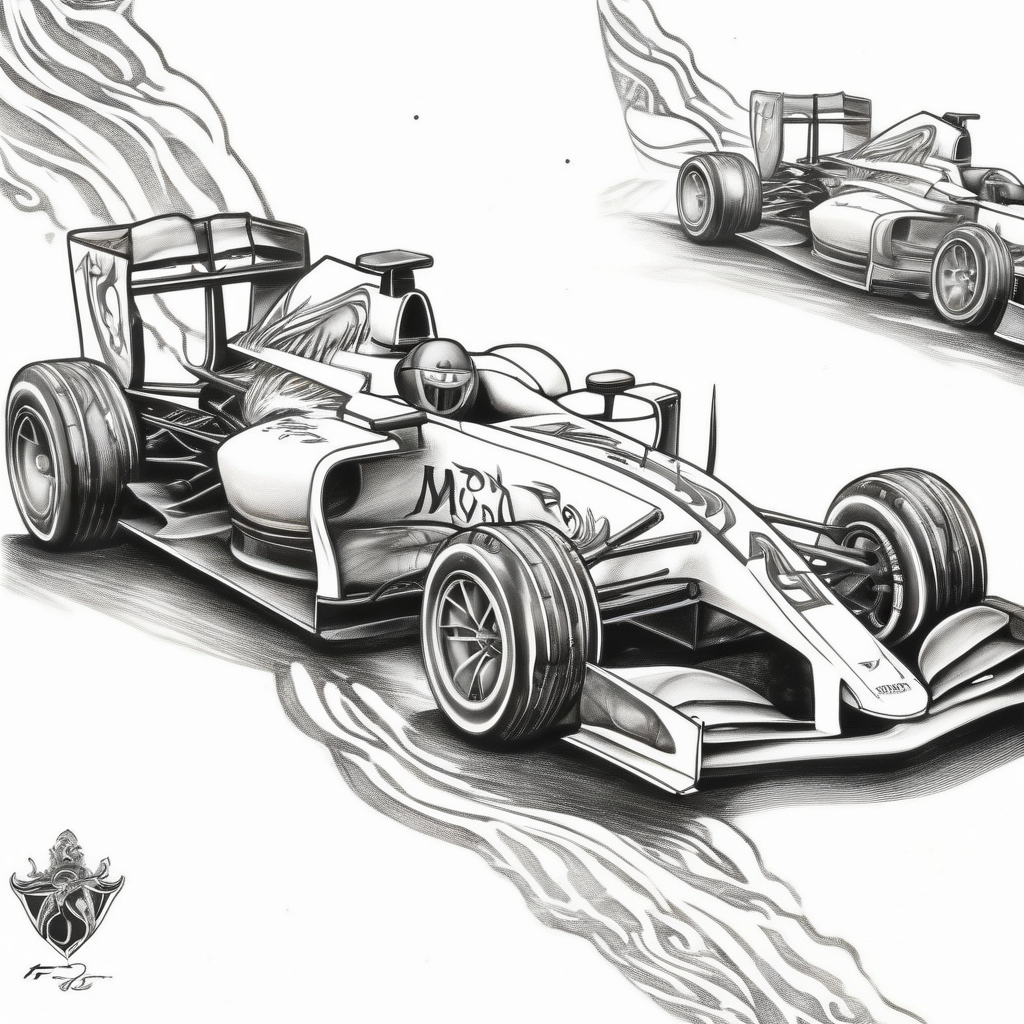

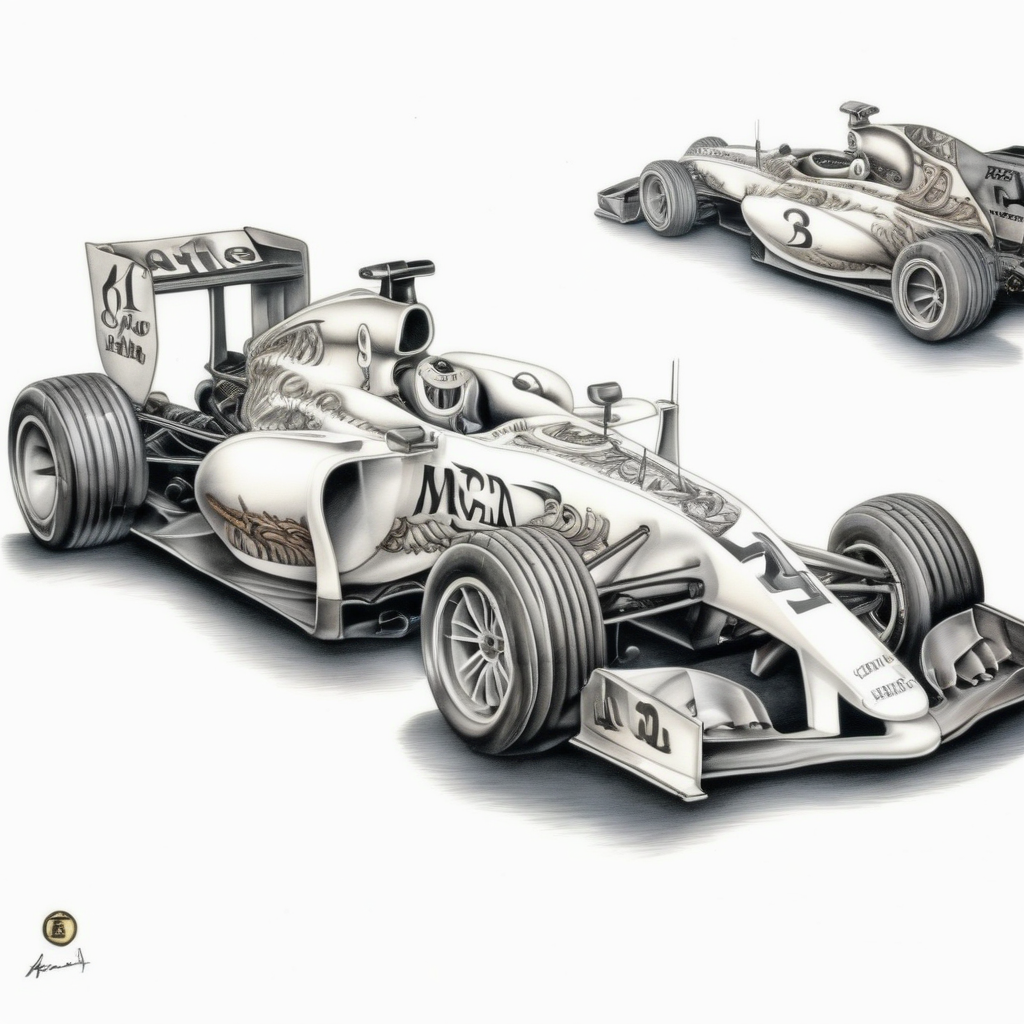

In comparison to attempts I've made to produce different styles using DALL-E/ChatGPT, these are more stylistically varied. Here's another example using the 3 styles to produce an F1 car under each method (InstantStyle on the bottom):

It's also worth noting that here, the use of multiple style images seems to naturally help us avoid the "content bleeding" noticed above. For example, if we just use the tree from the "realism" styled tattoos, we would get the following:

Controlnet variation and contrasting pairs

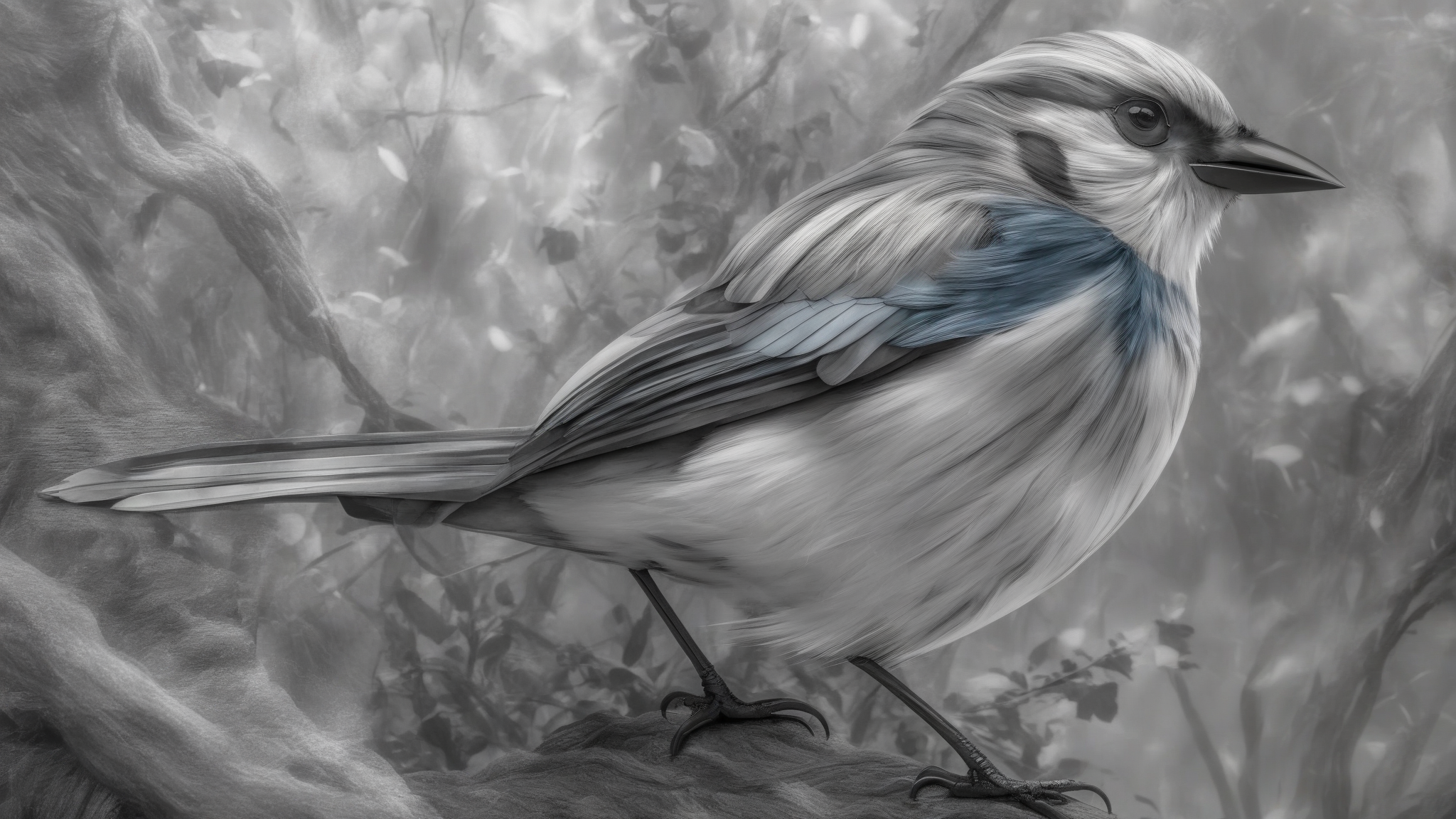

Next, let's try a ControlNet. With this method, we use a source image as well as a reference. The source image's edges will inform the structure of the final result. Here, I'm using a blue and red bird (originally generated for my AI-powered art frame) and applying the cartoon and spiral styles:

Interesting as that may be, once again, let's try to get some mileage out of our ability to use multiple images. In this case, I'm going to add a "contrastive pair" of references: a full-color and a black-and-white version of the same image. The intention is to extract the idea of "black and white" from the pair by assigning equal-but-opposite weights to the two references. Hopefully, this results in ignoring all content and style info except for the way in which the pair contrasts, which in this case is the color scheme.
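The cancellation argument can be sketched with toy vectors. Everything here is illustrative: the helper `contrastive_direction` is hypothetical, and real image embeddings do not split neatly into "content", "style", and "color" axes the way these 3-element lists do.

```python
from typing import List, Sequence


def contrastive_direction(
    positive: Sequence[float],
    negative: Sequence[float],
    weight: float,
) -> List[float]:
    """Return weight * positive + (-weight) * negative.

    Anything the two embeddings share cancels out; only the way in
    which they differ survives, scaled by `weight`.
    """
    if len(positive) != len(negative):
        raise ValueError("embeddings must have the same length")
    return [weight * (p - n) for p, n in zip(positive, negative)]


# Hypothetical axes: [content, style, color saturation].
full_color = [0.5, 0.25, 1.0]
black_and_white = [0.5, 0.25, 0.0]  # same image, color removed

# +weight on the black-and-white version, -weight on the color version.
direction = contrastive_direction(black_and_white, full_color, weight=0.5)

# Adding the direction to some other embedding nudges only the color axis.
base = [0.25, 0.75, 1.0]
shifted = [b + d for b, d in zip(base, direction)]
```

In this toy setup the shared content and style entries cancel to zero, so only the color entry of `base` moves. How cleanly that holds for real embeddings is exactly what the experiment below probes.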

For reference, here's the result if we change the prompt and negative prompt to encourage black and white (noting this was done naively, and could likely be improved with better prompting):

I think this demonstrates that, with the right weight, this "contrastive pairing" works pretty well! It may be the most interesting use case for multiple reference images, and I will definitely be experimenting more in this area. It would be interesting to use more costly/manual methods to produce such pairs and see if I can readily apply that same effect to a variety of images. Perhaps I can build out a set of "restyling" tools for the AI art frame.

Note: While I was working on this, InstantStyle was added to diffusers. This method is way easier to use, supports multiple images natively, and features masking. If you want to play with this, use that method.
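For anyone going the diffusers route, style-only injection is configured by passing a per-block scale dictionary to the pipeline's `set_ip_adapter_scale` method after loading an IP-Adapter. The sketch below only builds that dictionary. The helper name `instantstyle_scale` is my own, and the block names follow the SDXL example in the diffusers IP-Adapter guide as I understand it, so check them against the current docs before relying on them.

```python
from typing import Dict, List


def instantstyle_scale(
    style_weight: float = 1.0,
    include_layout: bool = False,
) -> Dict[str, Dict[str, List[float]]]:
    """Build a per-block IP-Adapter scale dict for SDXL (hypothetical helper).

    Assumption: "up" / "block_0" is the style block and "down" / "block_2"
    is the layout block, as in the diffusers IP-Adapter guide.
    """
    scale: Dict[str, Dict[str, List[float]]] = {
        "up": {"block_0": [0.0, style_weight, 0.0]},
    }
    if include_layout:
        scale["down"] = {"block_2": [0.0, style_weight]}
    return scale


style_only = instantstyle_scale()
style_and_layout = instantstyle_scale(style_weight=0.8, include_layout=True)

# With diffusers installed and an SDXL pipeline `pipe` that has an
# IP-Adapter loaded (not run here), the dict would be applied as:
#     pipe.set_ip_adapter_scale(style_only)
```

Blocks left out of the dictionary get no image-prompt influence, which is what keeps the reference's content from leaking into the result.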